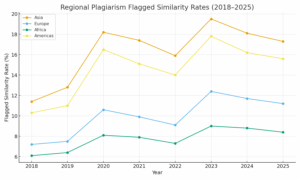

Between 2018 and 2025 the global picture of academic dishonesty shifted under three large forces: mass remote learning during the COVID-19 pandemic, the rapid spread of generative-AI writing tools, and wider adoption of commercial similarity-detection platforms. This report compares regional trends in Asia, Europe, Africa, and the Americas. It draws on aggregated similarity-check datasets, institutional disclosures, and published studies to present a normalized, comparative view of flagged rates and what they mean for educators and policymakers.

Methodological note

These figures are presented as normalized estimates drawn from large-sample similarity-check pools, institutional reports, and regional studies. They are intended to show comparative trajectories and regional differences rather than exact universal rates. Detection thresholds, institutional policies, and reporting transparency vary across vendors and countries; lower reported rates can reflect lower coverage rather than less misconduct.
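The point about coverage can be made concrete with a deliberately simple model. The Python sketch below assumes, hypothetically and not from the datasets behind this report, that a checker detects a fixed fraction of genuine cases (called `detection_coverage` here) and produces no false positives. Under that assumption the observed flagged rate is the true rate multiplied by that fraction. The function names and the 12% rate are illustrative only.

```python
def observed_rate(true_rate, detection_coverage):
    """Percentage of submissions flagged under a simple multiplicative model.

    Hypothetical assumption: the checker catches a fixed fraction
    `detection_coverage` of genuine cases and never flags innocent work.
    """
    if not 0.0 <= detection_coverage <= 1.0:
        raise ValueError("detection_coverage must be between 0 and 1")
    return true_rate * detection_coverage


def implied_true_rate(observed, detection_coverage):
    """Invert the same model; undefined when coverage is zero."""
    if not 0.0 < detection_coverage <= 1.0:
        raise ValueError("detection_coverage must be in (0, 1]")
    return observed / detection_coverage


if __name__ == "__main__":
    # Two hypothetical regions with the SAME underlying rate of 12%
    # but very different detection coverage.
    for name, coverage in [("high-coverage", 0.9), ("low-coverage", 0.5)]:
        print(name, round(observed_rate(12.0, coverage), 1))
```

Under these assumptions, two regions with the same underlying 12% rate would report roughly 10.8% and 6.0%. Real detection also involves false positives, threshold choices, and uneven reporting, so the model only illustrates the direction of the bias, not its size.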

Regional summaries (2018–2025)

Asia. Asia shows the greatest internal variation and some of the highest normalized flagged similarity rates across the period. Rapid expansion in higher-education enrollment and fast adoption of online submission platforms produced a substantial increase in detected similarity during the pandemic years (2020–2021). Contract-cheating markets and cross-border essay mills have been especially visible in parts of South and Southeast Asia. From 2022 onward, institutional investment in detection tools and integrity policies helped reduce classic copy–paste cases, though generative-AI misuse emerged as a major challenge after 2022.

Europe. European institutions generally report lower raw similarity flags than some high-volume Asian systems. Europe's main trend is not higher volume so much as a change in the character of misconduct: many universities report declines in simple copy–paste plagiarism but increasingly sophisticated evasion techniques (paraphrasing tools, contract cheating through commercial writing services, and AI-assisted writing). Widespread deployment of commercial detection platforms in Western and Northern Europe has improved case identification, while national academic-integrity initiatives have driven stronger reporting and penalties in several countries.

Africa. Published statistics for Africa remain the most limited of the four regions, reflecting lower penetration of large commercial checkers and uneven reporting. Where data exist, flagged rates appear lower on average, but that pattern is likely biased by limited coverage. Growing research attention and a rising number of institutional integrity policies in parts of sub-Saharan Africa point to both greater visibility and more robust detection, suggesting that actual misconduct levels may be higher than early datasets indicate.

The Americas. The Americas display a polarized pattern: North America (United States and Canada) maintains mature detection infrastructure and thorough institutional reporting, while Latin America shows heterogeneous adoption and reporting. Both subregions experienced spikes in flagged similarity in 2020 (the pandemic) and again in 2023 (the AI inflection). Cross-border contract-cheating networks have been reported to target students in multiple countries across the Americas.

Table: Normalized Flagged Similarity Rates (2018–2025)

Percentage of submissions flagged for significant similarity (normalized regional estimates).

| Year | Asia | Europe | Africa | Americas |
|---|---|---|---|---|
| 2018 | 11.4% | 7.2% | 6.1% | 10.3% |
| 2019 | 12.8% | 7.5% | 6.4% | 11.0% |
| 2020 (COVID peak) | 18.2% | 10.6% | 8.1% | 16.5% |
| 2021 | 17.4% | 9.9% | 7.9% | 15.1% |
| 2022 | 15.9% | 9.1% | 7.3% | 14.0% |
| 2023 (AI spike) | 19.5% | 12.4% | 9.0% | 17.8% |
| 2024 | 18.1% | 11.7% | 8.8% | 16.2% |
| 2025 | 17.3% | 11.2% | 8.4% | 15.6% |
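The report's claims of a 2020 pandemic spike and a 2023 AI spike can be checked directly against the table. The Python sketch below transcribes the table into a dictionary and computes percentage-point changes and local peaks. The names `RATES`, `year_over_year`, `local_peaks`, and `summarize` are illustrative, not part of any dataset or detection tool.

```python
# Normalized flagged-similarity rates (%) transcribed from the table above.
YEARS = [2018, 2019, 2020, 2021, 2022, 2023, 2024, 2025]
RATES = {
    "Asia":     [11.4, 12.8, 18.2, 17.4, 15.9, 19.5, 18.1, 17.3],
    "Europe":   [7.2, 7.5, 10.6, 9.9, 9.1, 12.4, 11.7, 11.2],
    "Africa":   [6.1, 6.4, 8.1, 7.9, 7.3, 9.0, 8.8, 8.4],
    "Americas": [10.3, 11.0, 16.5, 15.1, 14.0, 17.8, 16.2, 15.6],
}


def year_over_year(series):
    """Percentage-point change from each year to the next, rounded to 0.1."""
    return [round(b - a, 1) for a, b in zip(series, series[1:])]


def local_peaks(years, series):
    """Years whose rate is strictly higher than both neighbouring years."""
    return [
        years[i]
        for i in range(1, len(series) - 1)
        if series[i] > series[i - 1] and series[i] > series[i + 1]
    ]


def summarize(rates=RATES, years=YEARS):
    """Per-region net change, largest single-year jump, and local peaks."""
    summary = {}
    for region, series in rates.items():
        jumps = list(zip(years[1:], year_over_year(series)))
        summary[region] = {
            "change_2018_2025_pp": round(series[-1] - series[0], 1),
            # max() keeps the earliest year if two jumps tie.
            "largest_jump": max(jumps, key=lambda pair: pair[1]),
            "peaks": local_peaks(years, series),
        }
    return summary


if __name__ == "__main__":
    for region, stats in summarize().items():
        print(region, stats)
```

In every region both 2020 and 2023 come out as local peaks. The largest single-year jump is 2020 for Asia (+5.4 points) and the Americas (+5.5 points), but 2023 for Europe (+3.3 points). Changes are expressed in percentage points rather than relative percentages so that regions with different baselines are not exaggerated or flattened.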

The AI inflection and policy responses

Generative-AI tools changed the landscape around 2022–2023. Many institutions reported a surge in AI-assisted submissions; detection vendors responded with new classifiers, and institutions updated their academic-integrity policies. Educational responses such as scaffolded assessments, greater use of oral examinations and portfolios, and targeted writing instruction have been reported to reduce some forms of misuse where they were implemented consistently.

Key takeaways

- Volume shapes visibility. Regions with larger enrollments and more online submissions (parts of Asia and the Americas) dominate raw flag counts.

- Reporting bias is real. Lower reported rates do not necessarily mean less misconduct — they can reflect less detection coverage or lower reporting transparency.

- Different problems require different solutions. Copy–paste plagiarism, contract cheating, and AI-assisted work are distinct and require tailored detection and pedagogical responses.

- Policy + pedagogy. Detection technology works best when combined with constructive academic writing instruction and assessment design that reduces opportunities for misuse.

From 2018 to 2025 the global portrait of plagiarism was reshaped by technology and the pandemic. Regional differences reflect enrollment size, digital readiness, market forces (essay mills and contract-cheating services), and institutional capacity to detect and report misconduct. Going forward, hybrid strategies that combine improved detection, clearer reporting standards, and assessment reform will remain central to effective academic-integrity policy.